Eventual Technology

Position: Company Director / CTO Duration: 5 years

Description: I enjoy being a hands-on developer, so in 2019 I decided to set up a limited company called Eventual Technology and began contracting. Most projects have ended up being inside IR35, though, so they ran through umbrella companies or on fixed-term contracts (PAYE).

Here I will outline the contracts and work done at those places.

GÉANT

Date 2024-01-01 Position: Software Architect Duration: 7 months Location: Cambridge (+ 2 days remote WFH)

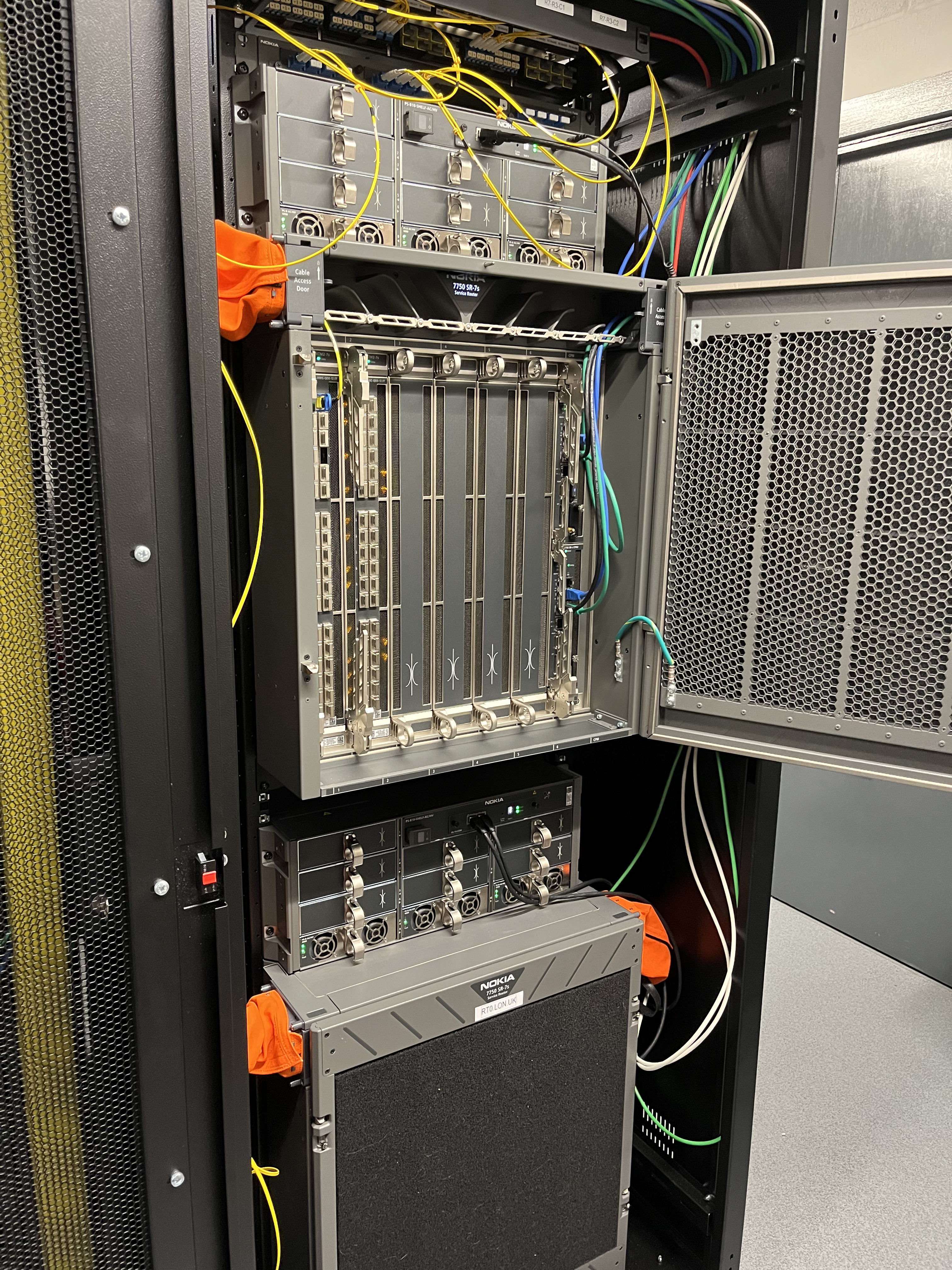

Roles and Responsibilities: After a break of several months travelling, I started working again in Cambridge for a company called GÉANT as a Software Architect and was involved in 2 key projects. GÉANT interconnects national research and education networks (NRENs) across Europe. This means they run fibre between countries to join their universities together, giving researchers an unfiltered backbone internet. GÉANT installs the routers and monitors the physical connections between them, typically at 2 datacentres per country (for fallback).

These aren't your standard routers


- Development of a new 'dashboard' monitoring system. We ultimately vetted and worked with an open source tool called Argus.

The team had a 'dashboard' that collects 'traps' from all the routers at datacentres in each country. The dashboard is an alarm aggregator which picks up alerts from other systems that detect issues around the network of European university routers and the relays between them. The existing system was probably over 2 decades old and built in Java. A refresh was severely overdue; however, the NOC (first-line support) were familiar with the legacy system and resistant to change. Another NREN called Sikt was building a similar alarm aggregator, Argus, but it didn't quite fit the needs of GÉANT. My job was to undertake a gap analysis of the existing system and Argus, then create an RFI and SOW so that we could work with Sikt to fill the gaps and develop a new replacement dashboard. It was decided to scrap the React UI on their system and rebuild it with htmx, as they already had a pretty sturdy Django backend.

Don't you just hate it when they want to email your picture around the office

- OS migration of software and services onto new hardware and a new operating system.

They had many projects spread across many servers, all running a version of CentOS that was several years old. The challenge was to move everything onto Ubuntu with zero downtime. They had Puppet in place so it was relatively simple; however, they wanted to upgrade at the same time as migrating. This meant RabbitMQ, MariaDB, the OS and the Python version all needed to be upgraded during the migration. This was not cloud based, as they were running all their own hardware. I created an agile workflow for the test servers that could then be rolled across the other environments.

The idea was to bring 3 new machines into the cluster, then turn off the old 3. This worked in principle but was a little fiddly in practice (RabbitMQ). That's 3 new machines per service, per environment.
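
The add-then-retire order can be sketched roughly as below. This is an illustration only: the node names and the `rolling_replace` helper are hypothetical, and the real work was driven through Puppet rather than a script like this.

```python
def rolling_replace(old_nodes, new_nodes):
    """Yield the cluster membership after each step of an add-then-retire swap.

    All new nodes join first, then old nodes leave one at a time, so the
    cluster never has fewer members than it started with.
    """
    cluster = list(old_nodes)
    for node in new_nodes:   # step 1: grow the cluster with the new machines
        cluster.append(node)
        yield list(cluster)
    for node in old_nodes:   # step 2: retire the old machines one by one
        cluster.remove(node)
        yield list(cluster)


# Hypothetical node names, 3 old and 3 new as in the migration described.
steps = list(
    rolling_replace(["old-1", "old-2", "old-3"], ["new-1", "new-2", "new-3"])
)
```

Growing before shrinking is what keeps the service up during the swap; the fiddly part in practice is that each node has to be fully joined and in sync before an old one is retired.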

99% of projects were using the ELK stack, and every project looked the same as a result: an unskinned Kibana dashboard over an Elasticsearch backend. There was little in the way of design and there were no real front-end developers in the team. There was also no QA. This was largely because both the clients and the software were internal only.

I enjoyed the complexity of the projects and Thursday drinks; however, in the end staying at hotels 3 nights a week in Cambridge was detrimental to my physical health. I actually gained over 15kg, probably from eating junk food and takeaways. It was a good experiment but London is a more practical commute.

Another night in a Travel Lodge


Department for Levelling Up, Housing and Communities (gov.uk)

Date 2023-01-01 Position: Contract Python Developer Duration: 6 months Location: Fully Remote (except 2 days in the Birmingham Head Office)

Roles and Responsibilities: I contracted at the Department for Levelling Up, Housing and Communities (DLUHC) as a Python specialist in the analytics team on behalf of Zaizi. I was brought in as extra support to help with a significant workload ahead of the soft launch of their product.

The team consisted of DB admins, mathematicians, and statisticians, and I was responsible for developing validation tests to ensure data integrity for the department's 'Ministerial Dashboards'. These dashboards provided MPs with visual tools to assess a vast array of data points, such as public infrastructure, education, and demographics across various regions.

I co-developed a suite of over 1,000 validation tests to prevent regression issues and ensure accurate data ingestion from various sources. This involved working with Microsoft SQL Server, a new tool for me, but one that quickly became essential to the project. We created SQL views to pull through the data for specific councils and areas of responsibility, organised by year. These views allowed us to dynamically retrieve data based on region, time period, and governing body, providing clear insights into local and national government metrics. The tests reduced errors, caught outliers, and ensured data consistency before deployments. Working in an agile environment, we collaborated closely to build a robust, well-documented pipeline, and I felt proud to contribute to such a professional and impactful project.
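
A validation check of the kind described might look something like the sketch below. The row shape, the column names (`region`, `year`, `value`) and the bounds are invented for illustration; the real tests ran against SQL Server views and are not reproduced here.

```python
def validate_rows(rows, min_value=0.0, max_value=100.0):
    """Return a list of human-readable problems found in the rows.

    Checks for duplicate (region, year) keys, missing values and
    out-of-range outliers. An empty list means the data passed.
    """
    problems = []
    seen = set()
    for i, row in enumerate(rows):
        key = (row["region"], row["year"])
        if key in seen:
            problems.append(f"row {i}: duplicate key {key}")
        seen.add(key)
        value = row["value"]
        if value is None:
            problems.append(f"row {i}: missing value")
        elif not (min_value <= value <= max_value):
            problems.append(f"row {i}: value {value} outside [{min_value}, {max_value}]")
    return problems


# Hypothetical sample data, one deliberate fault per row after the first.
sample = [
    {"region": "Leeds", "year": 2022, "value": 41.5},
    {"region": "Leeds", "year": 2022, "value": 43.0},    # duplicate key
    {"region": "Bristol", "year": 2022, "value": None},  # missing value
    {"region": "Hull", "year": 2022, "value": 250.0},    # outlier
]
problems = validate_rows(sample)
```

Returning a list of problems rather than failing on the first one makes a single run report everything wrong with an ingestion, which suits a pre-deployment gate.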

The project involved setting up an automated pipeline to collect data from multiple sources, including APIs and spreadsheets, and integrating with one department’s initiative to create a centralised government database to stop duplication of effort across departments. This required careful coordination between local councils and central government to ensure clarity on which data sets were owned and managed at each level. We also created notification services about upcoming or obsolete data sets.

Our agile process included task scoring, refinement sessions, definition of done, acceptance criteria, WIP limits, and retrospectives. Daily stand-ups were focused on the tasks and Kanban board, rather than individual performance, fostering a collaborative and efficient work environment.

UBS Bank

Date 2022-06-01 Position: Prototyper Duration: 3 months


Roles and Responsibilities: I worked at UBS on a prototype project for the group treasury to build an early warning and reporting system for Profit or Loss (PoL) margins over £100k. This project was centred around the idea of "Corporate Memory". Basically, when there's a PoL over £100k a bunch of people get in a room, and what's decided in that room is effectively lost into the ether of conversations and emails. The job was to prototype a system that could capture that data for analysis by AI, which might then be able to inform future PoLs. The tool was built using Microsoft technology.

During this role, I received training in finance to better understand the project’s financial context. I also had to learn and work with Microsoft Power Apps, a low-code platform used to build applications efficiently, and Microsoft Dataverse, which integrates with Power Apps. We also used the low-code / no-code tools for automation, such as sending notifications.

At the end of the contract it was requested that I present the solution on the trading floor to over 40 internal staff members to try and sell-in the utility of Power Apps.

This work was done via the company Synechron, who hired me for the role on a fixed-term contract that finished once the prototype was complete.

ReImagine Digital

Date 2020-01-01 Position: Interim CTO Duration: Several months

Clients:

- Geidea
- Fladgate
- Venari
- DAR

Roles and Responsibilities: ReImagine Digital had been outsourcing all their development since inception. The owner had sold his previous company to Infosys. This new startup had only a Project Manager. Their chosen stack was largely PHP WordPress sites or Craft-CMS websites. With a focus on content updates, they outsourced all software development to India. I was invited to join ReImagine to help address some key technical challenges and strengthen client relationships. During my time there, I successfully tackled critical issues, improved processes, and contributed to enhancing the company's reputation with its clients.

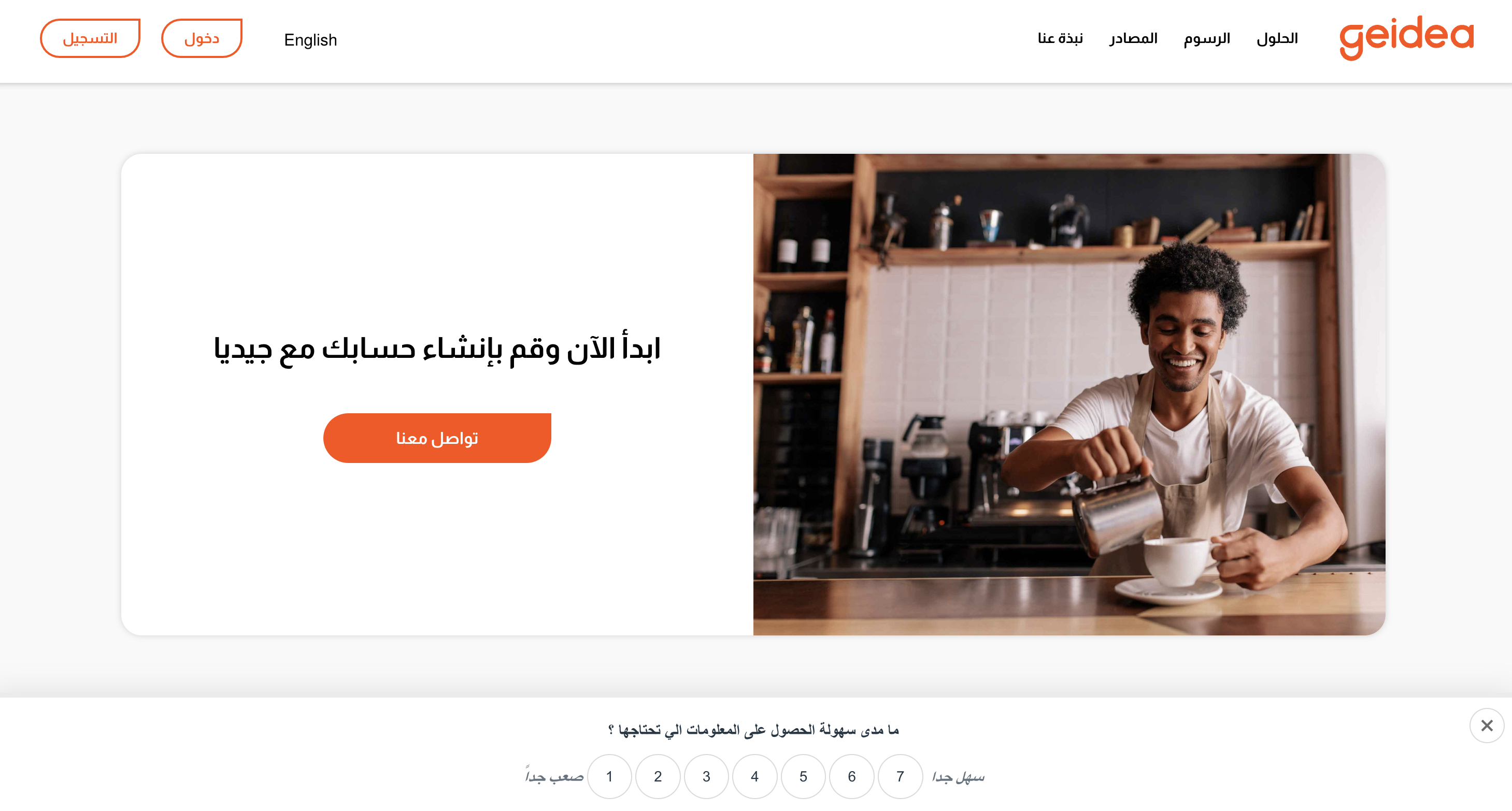

Geidea

Localisation of a WordPress site for a client in the UAE. This involved creating a clone of the existing WordPress site and applying all the localisations. Additionally, I adjusted Apache configurations to support routes for the new locale. I also provided SEO recommendations and made adjustments to enhance the site’s visibility and performance, such as cleaning up code, optimising imagery and following the recommendations surfaced after setting up Google Webmaster.

Arabic version

https://geidea.net/merchants/ar/

English version

https://geidea.net/merchants/en/

Fladgate

Roles and Responsibilities: Set up blue/green deployment on Fladgate to stop regression issues caused by outsourced partners deploying the wrong versions of the website.

The main issue was that content was being added by Fladgate staff while outsourced partners were making amendments to a UAT environment. Without communication, this meant that on several occasions they had deployed updates but accidentally removed content created by internal users.

The solution would have been to sync the databases; however, this wasn't simple with Craft-CMS due to the IDs that are created for specific pieces of content. Some modules existed that promised to do the work but were paid and untested. My solution was to put a different deployment methodology in place that ensured the client's content changes were protected from being deleted.

I also made some content updates to get the video profiles of the lawyers working.

Venari

https://www.venarisecurity.com/

Set up nginx and SSL certs for Venari's Craft-CMS website.

The task was to get the site live, then create a dev environment. The outsourced dev was publishing from his own machine to the live environment and was leaving once the site was completed. So I created a UAT environment after the site went live and changed the deployment process, to avoid a repeat of the Fladgate issues.

DAR Pitch

I used Unreal Engine to create some renderings of a proposed augmented reality app that would allow users to choose light fittings and see them in their lounge.

HMLR (gov.uk)

Date 2022-01-01 Position: Contract HTML/Python Developer Duration: 6 months

Roles and Responsibilities: I worked for HMLR to digitise the form conveyancers use to transfer property deeds between people. The application was built using Flask and mostly involved writing Jinja templates and Flask models. It was fun to work with the GOV.UK Design System and component libraries. The codebase had high standards for accuracy, with code coverage requirements and quality gates. We had to mock every possible type of edge case because no one was allowed access to the actual database. We ran many tests in the test environment, and full suites of acceptance tests were created for the UAT environment against a partial dataset before code moved into the fully live environment, where nobody had access.

This was an uber agile environment and I learned a lot about mocking properly, unit testing and parameterising tests. Most tickets here were strictly test-driven: the tests literally came first in 99% of cases.
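
A small sketch of that style of test, using only the standard library (`unittest.mock` for the mock and `subTest` for the parameterised cases; `pytest.mark.parametrize` would be the more usual choice in a pytest codebase). The `title_summary` function, the `get_title` method and the sample record are hypothetical and are not taken from the HMLR codebase.

```python
import unittest
from unittest.mock import Mock


def title_summary(repo, title_number):
    """Build a display string for a title from a repository we cannot reach in tests."""
    record = repo.get_title(title_number)
    if record is None:
        return "Title not found"
    return f"{record['title_number']}: {record['address']}"


class TitleSummaryTests(unittest.TestCase):
    def test_summary_for_each_case(self):
        # Each tuple is (what the mocked repository returns, expected output).
        cases = [
            ({"title_number": "AB123", "address": "1 High St"}, "AB123: 1 High St"),
            (None, "Title not found"),
        ]
        for record, expected in cases:
            with self.subTest(record=record):
                repo = Mock()  # stands in for the inaccessible database layer
                repo.get_title.return_value = record
                self.assertEqual(title_summary(repo, "AB123"), expected)
                repo.get_title.assert_called_once_with("AB123")


suite = unittest.defaultTestLoader.loadTestsFromTestCase(TitleSummaryTests)
result = unittest.TextTestRunner(verbosity=0).run(suite)
```

Written test-first, the `cases` list is what comes before the implementation: each new edge case is one more tuple, and the mock lets it run without any real data.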

Acceptance testing was typically done after development by another off-shore team, but ideally, in my opinion, the tests could have been created upfront to help drive the development. Since QA and acceptance testing were outsourced, we often had to create videos explaining fixes due to the complexity and domain-specific knowledge involved. Conveyancers or product owners would describe the bug to me and, after replicating and resolving it, I'd record a short video demonstrating the fix for the reviewers. This "each-one-teach-one" approach helped streamline the review process.

Here are some video examples of various issues addressed. Usually I work in strict NDA environments and can't show this level of development work. But gov.uk is completely transparent and it is all open source.

Menzies

Date 2022-01-01 Position: Contract Python Developer Duration: Several months

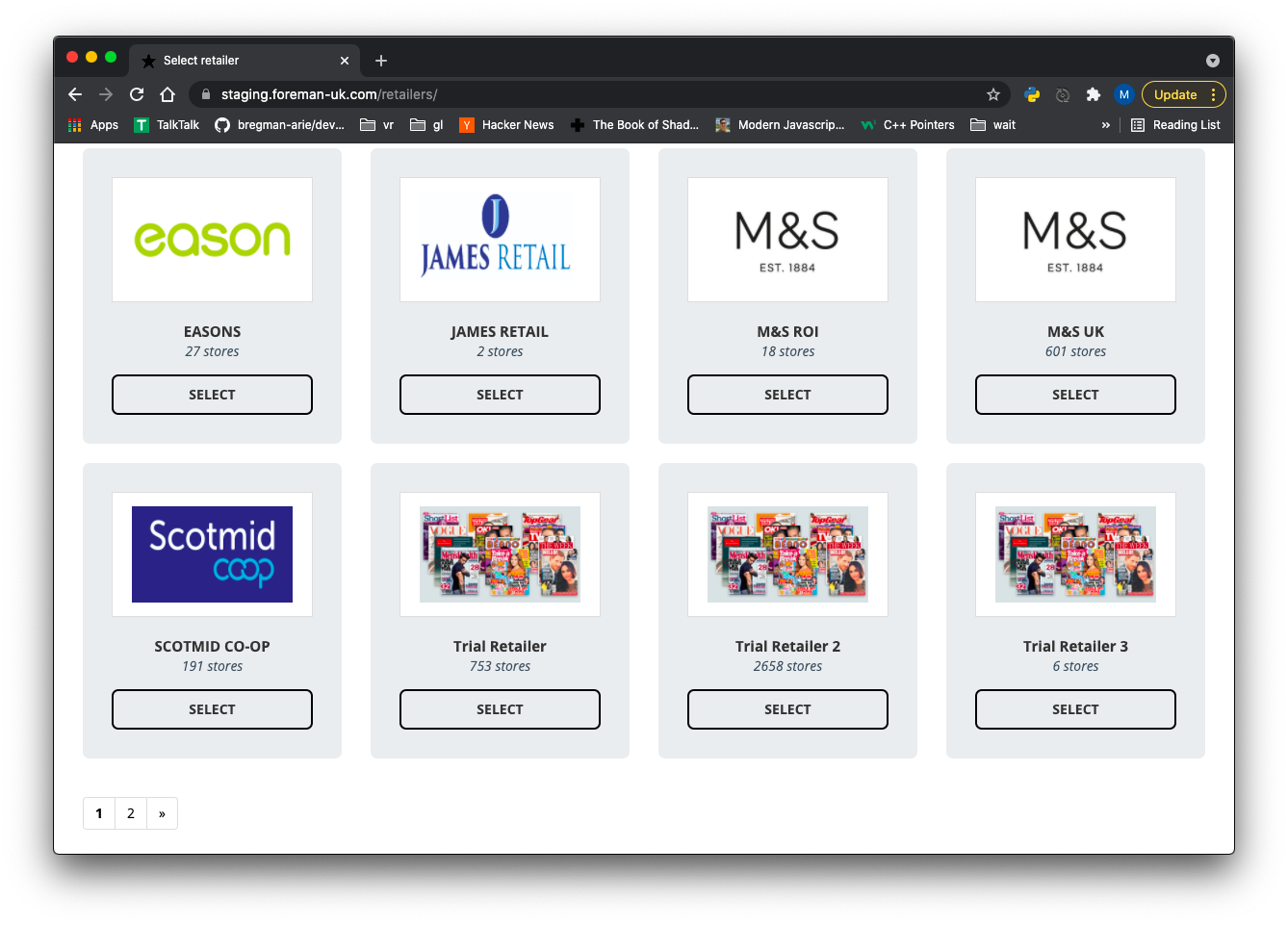

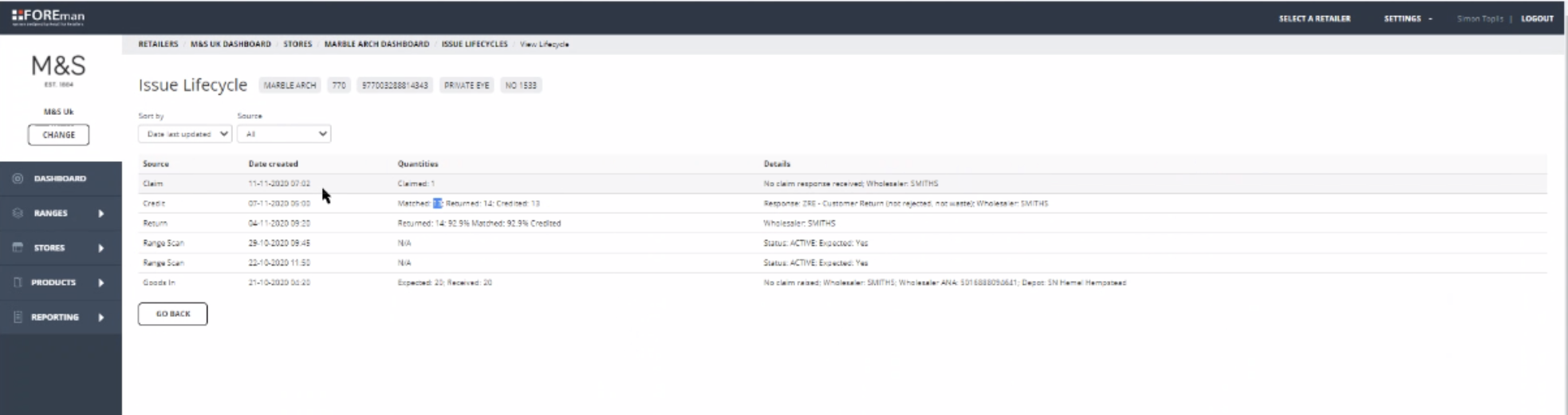

Roles and Responsibilities: I was hired directly by Menzies to improve their successful 'Foreman' application and help recruit a full-time developer to manage day-to-day operations, while rolling out the product to thousands of new users.

The role included upgrading their Django application from version 1.0 to 3.6. I had to gradually walk it up through some minor versions due to various plugins. I also automated spreadsheet ingestion and resolved API issues with a caching strategy.

Foreman is used by Tesco, Marks & Spencer, and newsagents across the UK to manage newspaper and magazine inventories by scanning barcodes via a mobile app, which tells sellers when to return magazines (Sale or Return).

The challenge was scaling to 30,000+ simultaneous users in its 3rd year of usage. At about midday they would begin scanning the newspapers and the REST API would get hit really hard. I used NGINX Amplify to identify which API calls were most problematic. The issue was not inefficient database queries but the lack of a caching strategy. After setting an nginx cache on some of the read-only endpoints (e.g. the daily inventory download), only about 10% of traffic needed to hit the application layer at all. This fixed the slow responses users were getting in-store.

Another thing I noticed was that, despite the application being 3 years old and apparently having had up to 15 developers working on it, no one had bothered to change the default config file for Postgres. This meant the database wasn't tuned for the type of traffic it was getting. I was able to change many settings to bring the cache hit ratio closer to 0.9.
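
For context, a cache hit ratio like this is commonly computed from the `blks_hit` and `blks_read` counters in the `pg_stat_database` view. The helper below is a sketch of that arithmetic with made-up numbers; it is an assumption that this is the same measure used at the time.

```python
def cache_hit_ratio(blks_hit, blks_read):
    """Fraction of block requests served from the buffer cache rather than disk."""
    total = blks_hit + blks_read
    if total == 0:
        return 0.0  # no activity recorded yet, so avoid dividing by zero
    return blks_hit / total


# Illustrative counters only, not real figures from the Foreman database.
ratio = cache_hit_ratio(blks_hit=9_000, blks_read=1_000)
```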

The database also had millions of rows in some tables, as nothing had ever been archived, and they were logging to the database rather than to a syslog server or service. While application logs were sent to Sentry, a log table was written to on every request and was growing rapidly every day. I archived old data and upgraded the database to a newer version.

Another issue was lingering containers. They were running all their environments on the same server using containers, so a test container, a UAT container and a live container all sat on the same box. This caused feeds to be ingested by all 3 environments at the same time, choking the resources on the box. I resolved this issue and worked with Menzies IT to set up new environments on Microsoft Azure.

We also staggered the times at which the apps downloaded the database and moved them to the evenings, and moved some of the spreadsheet ingestion code out of the Django app into its own services. I also noticed BA users connecting to the main database during operating hours, so we built a replicated database for them to connect to instead. I moved DB backups to run overnight, automated vacuuming of the Postgres DB and upgraded it to the latest version. Finally, I created a UI for the ingestions so the client could run them rather than needing a dev to run them on the command line.

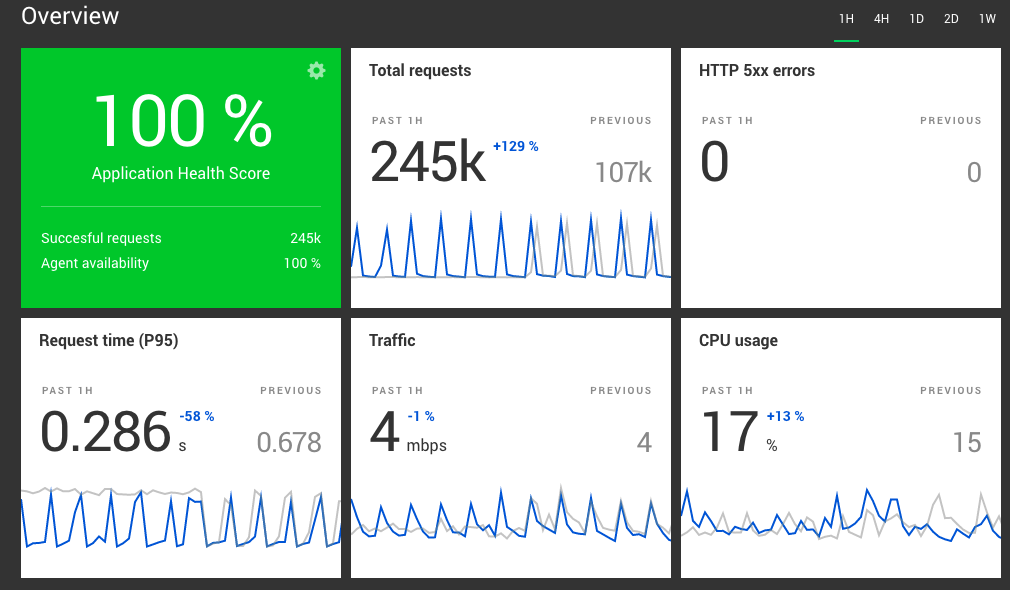

Eventually I got it looking healthy: a 1000x improvement. Here's a snapshot of stats for 1 hour at peak time. Given that we were previously dropping 75% of requests and the CPU was choked, it's a great result.

This came largely from having an nginx cache, optimising the Postgres config, vacuuming the DB and memoizing the odd function within the app.
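
Memoizing a function in Python can be as small as one decorator. The sketch below uses `functools.lru_cache`; the `price_band` function and its return value are invented stand-ins for an expensive lookup, not code from Foreman.

```python
from functools import lru_cache

calls = {"count": 0}  # counts how often the function body really runs


@lru_cache(maxsize=1024)
def price_band(barcode_prefix):
    """Pretend-expensive lookup; the body only runs on a cache miss."""
    calls["count"] += 1
    return f"band-{len(barcode_prefix)}"


first = price_band("977")   # cache miss: the body runs
second = price_band("977")  # cache hit: served from memory
```

This only suits functions whose result depends purely on their arguments; anything that reads live data needs an expiry or explicit invalidation instead.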

Can I sleep now? 😂


Sponge

Date 2021-01-01 Position: Software Architect Duration: Several months

Roles and Responsibilities: During Covid lockdown I had trouble finding work in London and came across a company in Scotland called Sponge that were looking for someone to help out. They allowed me to work remotely.

Sponge Learning had recently acquired a company called Mercury Tide. Mercury had lost much of its work during lockdown and was going bankrupt. They had some IP in a database that housed the training details of millions of staff, including Tesco staff. They had laid off 20 developers, and Sponge had acquired the company to get their hands on this database as well as the relationship with Tesco. However, there were several clients and pieces of software that Sponge were not interested in. That's why I was hired.

Menzies

The job was to complete outstanding contracts with clients they were waving goodbye to, one of which was Menzies. It was nothing personal, just that Sponge only deal with 'learning' software and training programs, so a lot of these other websites for local councils in Scotland, small eateries etc. weren't required by them. However, maintaining them was coming at a cost. The particular job I was assigned to was a tool for Menzies which was used to credit retailers with the sale or return of newspapers and magazines. There were several outstanding tickets in Jira, and an attempt had been made to outsource the work to, for some reason, a PHP developer, which had gone badly as the site was built in Django, which is Python. It effectively became my first commercial Django gig. I fixed the problems and got the client smiling enough to sign off the work.

When I joined the project all the previous developers had left without a trace, so I had to unpick a lot. Luckily the env vars weren't encrypted and that's where I found the DB passwords. I quickly created a dev guide to log my findings for anyone else who might join.

Tesco

I provided some consultation and a prototype for a serverless application for Tesco.

Theia

I helped another client called Theia migrate their multi-tenant Django app away from Sponge servers and onto their own architecture.

BT Sport

Date 2019-01-01 Position: Contract Full-Stack Developer Duration: 6 months

Roles and Responsibilities: I started at BT Sport in the Winter of 2019 just before the Covid lockdown. My role was to cover for someone who was suddenly leaving while they hired a new developer to run the team. There was a brief 1-2 week handover of a system that trafficked sports events between Sky TV and BT.

Basically, the football rights were split roughly 50/50 between Sky and BT for live games. However, both services shared the games on catch-up or watch-later services, so various technologies were co-developed to share the football games between each other. Other sports were also included, e.g. boxing matches, but the majority of the pipeline was built around the football.

There was also some integration with the BT Home Hub for TV on demand, music streaming etc.

There was a shared database system called Bebanjo for transferring metadata between the 2 companies, e.g. descriptions and thumbnails for rare back catalogue items.

I had several responsibilities here:

- Learn as much as possible about the main tool / system so as to hand over to the next incumbent

It appeared to be the largest Python file I've ever seen in my life: probably tens of thousands of lines in one huge workflow, with catches for every possible kind of exception that would log to Sentry. There was also a React front-end for viewing existing workflows or manually creating new ones. The project was called 'Ocean' and processed all the media files to make them available for the set top box.

- Develop software to interact with the onsite Ateme encoders

For example, BT did a brand update, so we had to iterate through the entire back catalogue, trim off the first 2 seconds of video where their logo was embedded, update the log and save it. This would run as batch jobs for days. There were also many tools for quality checks, verification etc.

- Create automations for batch updating Bebanjo

Bebanjo's API wasn't complete, so you couldn't, for example, update the thumbnails for the images in the system. As they are 'partially managed', we met with them and asked them to update the 100k+ images, since 4K and better screens called for better thumbnails. However, they wanted a vast sum of money. The solution was to create a Selenium bot to do it via the web UI and run it for several days, obviously within the terms of service.

- Mass updates to data...

This was my first look at a commercial Lambda function. It involved a lambda that could spawn more lambdas: one lambda would read a spreadsheet and create a new lambda for every row in the spreadsheet to post data updates to the Bebanjo API. However, they asked us not to post so many simultaneous requests.
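
One way to keep the per-row fan-out while capping simultaneous requests is a bounded worker pool. The sketch below shows the idea locally with threads rather than Lambda invocations; `post_update` is a hypothetical stand-in for the API call and `MAX_IN_FLIGHT` is an assumed limit, not a figure anyone agreed at the time.

```python
import threading
from concurrent.futures import ThreadPoolExecutor

MAX_IN_FLIGHT = 4  # assumed cap; a real limit would come from the API owner

_lock = threading.Lock()
_in_flight = 0
peak_in_flight = 0


def post_update(row):
    """Hypothetical stand-in for one API call; also records peak concurrency."""
    global _in_flight, peak_in_flight
    with _lock:
        _in_flight += 1
        peak_in_flight = max(peak_in_flight, _in_flight)
    try:
        return {"id": row["id"], "status": "ok"}
    finally:
        with _lock:
            _in_flight -= 1


rows = [{"id": i} for i in range(20)]  # one dict per spreadsheet row

# The pool size bounds how many updates run at once, unlike an
# unbounded one-worker-per-row fan-out.
with ThreadPoolExecutor(max_workers=MAX_IN_FLIGHT) as pool:
    results = list(pool.map(post_update, rows))
```

Every row is still processed, and `pool.map` returns results in row order, but no more than `MAX_IN_FLIGHT` requests are ever open at the same time.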